Entry 3: Correcting Global Pose Estimation

❌ Intrinsic Matrix Error

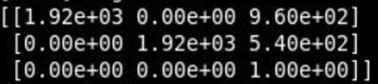

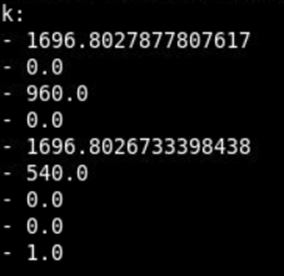

After looking at posts in the forum, I have seen that it had been indicated that the intrinsic parameter matrix of the actual camera

(when running ros2 topics echo turtlebot3/camera/camera_info) does not match what is indicated in the guide.

Below I show the intrinsic parameter matrix calculated according to the guide:

And here is the matrix K retrieved directly from the ROS2 topic:

The calibration difference is not significant enough to fully explain the observed errors in the pose estimation. So, while I have applied the correction, I continued investigating other potential issues in the process.

🧠 Initial Diagnosis

Since the first attempt, the estimated position of the robot didn’t align with its real location in the simulated map. Even after correcting the camera intrinsic matrix, the estimated pose was still wrong. This indicated a deeper conceptual issue: the transformations between coordinate systems were not being composed correctly.

📉 Nature of the Error

Upon close inspection, I realized that solvePnP estimates the tag's pose in the camera’s optical coordinate system, where:

- Z points forward

- X points right

- Y points down

This differs from the physical coordinate system of the AprilTag and the robot, where:

- X points forward

- Y points left

- Z points up

Ignoring these differences led to misaligned and misoriented pose estimations. To correct this, I built a transformation chain that includes:

-

Physical Tag → Optical Tag

First, a fixed transformation is applied to adapt the physical tag's frame to OpenCV’s expected optical frame:T_tag_to_tag_optical = np.array([ [ 0, 0, 1, 0], [-1, 0, 0, 0], [ 0, -1, 0, 0], [ 0, 0, 0, 1] ]) -

Optical Tag → Optical Camera

Obtained fromsolvePnPand inverted to express the camera relative to the tag. -

Optical Camera → Physical Camera

Inverted transformation to move from the optical to the physical system of the camera. -

Camera → Robot Center

The physical offset from the camera to the center of the TurtleBot, based on the robot’s model:T_cam_to_robot = np.array([ [1, 0, 0, 0.069], [0, 1, 0, -0.047], [0, 0, 1, 0.107], [0, 0, 0, 1] ]) -

World → Physical Tag

Built using the tag’s pose (position + yaw) from theapriltags_poses.yamlfile.

📏 Tag Size Correction

I had previously noticed this but overlooked its importance: the full tag size is 0.3, but I was calculating the corners based on the black square inside the tag, which is only 0.24. Correcting this parameter was crucial for the final accuracy of the pose estimation.

✅ Results after changes

With this corrected approach, the robot now appears exactly at its real position from the very first frame, and the estimated orientation aligns consistently with the camera’s perspective. The following image demonstrates this:

The next step will be to start moving the robot and incorporate odometry for localization when no tags are visible.